The questions enterprises ask about their systems have fundamentally changed.

In 2020, teams asked: “Is my system up?”

In 2026, they’re asking: “Why is the user experience degrading? Where exactly is the problem? How fast can we fix it?”

This evolution from traditional monitoring to full-scale observability isn’t just a technical upgrade—it’s a survival strategy. Cloud-native architectures, microservices proliferation, AI-driven applications, and unforgiving user expectations have made the old playbook obsolete.

At Cavisson Systems, we witness this transformation daily as enterprises abandon siloed metrics for unified visibility across applications, infrastructure, logs, and real user experiences.

Why Traditional Monitoring No Longer Works

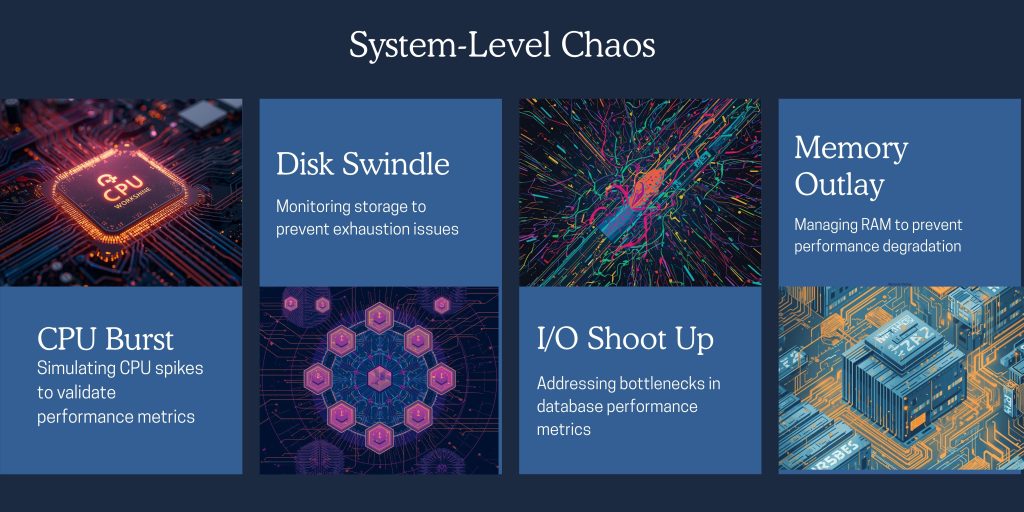

Traditional monitoring served us well for decades. It tracked known metrics—CPU usage, memory consumption, response times, uptime—against predefined thresholds. But today’s digital environments have outgrown this approach.

The new reality:

- Architectures are distributed, containerized, and ephemeral

- Failures cascade in non-linear, unpredictable ways

- Performance issues emerge from hidden dependencies

- User experience degrades long before alerts fire

The critical difference:

- Monitoring tells you what happened

- Observability tells you why it happened

Observability: The New Foundation for Digital Resilience

Modern observability rests on three interconnected pillars:

- Metrics – Quantitative performance indicators that reveal trends and anomalies

- Logs – Context-rich system events that explain what’s happening beneath the surface

- User Experience Data – How real users and synthetic journeys actually behave in production

True observability doesn’t just collect these signals—it weaves them into a coherent narrative, enabling teams to move from detection to resolution with speed and confidence.

The Cavisson Observability Ecosystem

Cavisson Systems delivers unified observability that empowers enterprises to proactively manage performance, reliability, and digital experience across their entire stack.

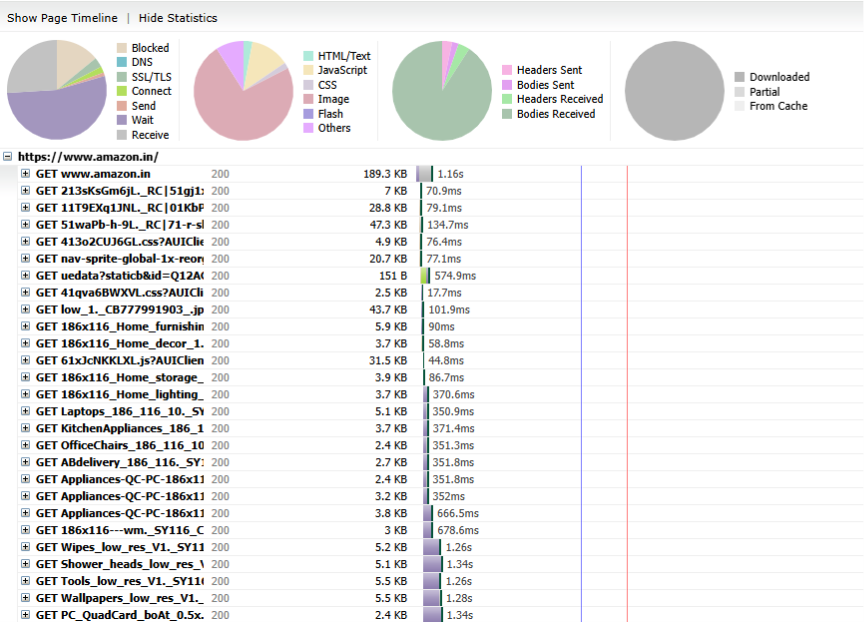

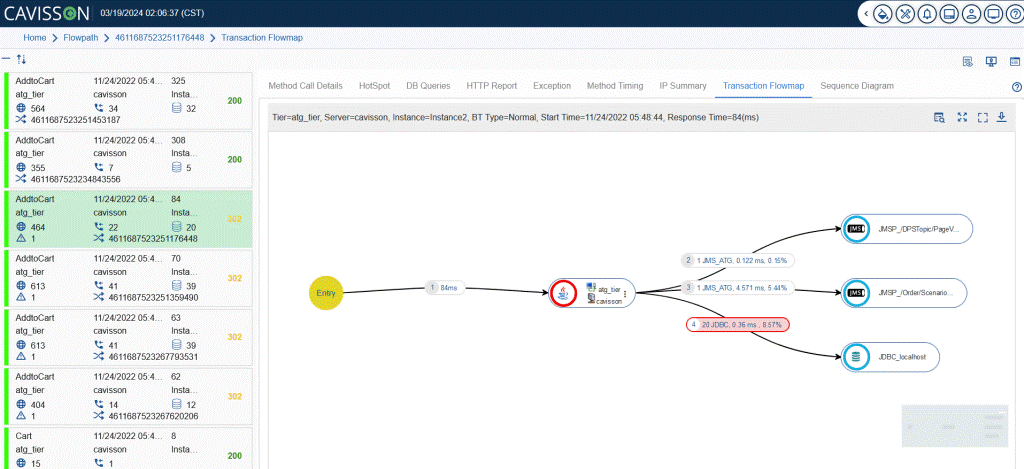

1. Application Performance Monitoring with NetDiagnostics

Your applications are the heartbeat of digital business. Any slowdown directly impacts revenue, trust, and competitive position.

NetDiagnostics provides:

- Deep visibility across all application tiers

- Real-time transaction tracing through complex architectures

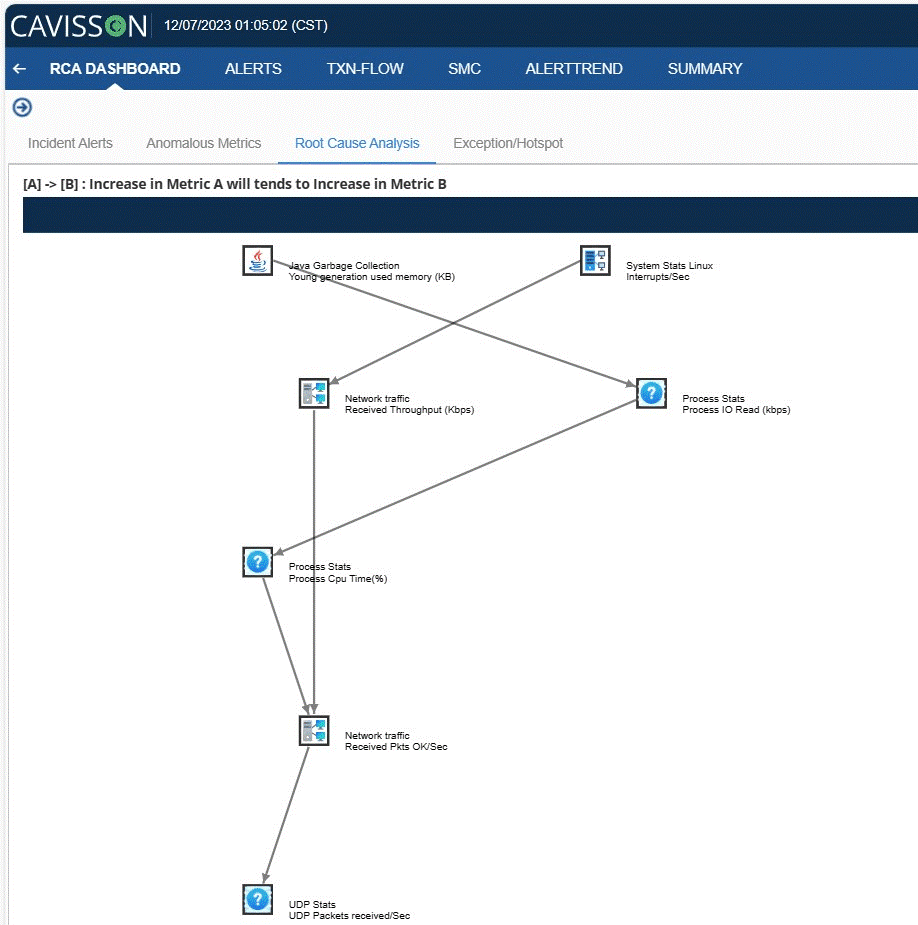

- Intelligent anomaly detection that learns your normal

- Rapid root-cause analysis that pinpoints issues in minutes, not hours

The result: Faster Mean Time to Resolution (MTTR) and the confidence to deploy rapidly without fear.
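To make the idea of anomaly detection that "learns your normal" concrete, here is a minimal sketch that compares a fixed threshold with a rolling baseline. It is not how NetDiagnostics works internally. The class name `RollingBaseline`, the z-score approach, the window size, and the sample latencies are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


class RollingBaseline:
    """Flags samples that deviate strongly from a rolling window of recent values."""

    def __init__(self, window: int = 30, z_threshold: float = 3.0, min_samples: int = 5):
        self.samples = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if value looks anomalous; normal values join the baseline."""
        anomalous = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = stdev(self.samples)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                anomalous = True
        if not anomalous:
            # Keep outliers out of the baseline so one spike does not skew it.
            self.samples.append(value)
        return anomalous


def static_threshold(value_ms: float, limit_ms: float = 1000.0) -> bool:
    """Traditional check: alert only when a predefined limit is crossed."""
    return value_ms > limit_ms


# Illustrative response times in milliseconds (made-up data).
latencies_ms = [120, 118, 125, 122, 119, 121, 124, 120, 123, 480, 122]

baseline = RollingBaseline()
flags = [baseline.observe(v) for v in latencies_ms]
threshold_alerts = [static_threshold(v) for v in latencies_ms]
```

In this sample, the 480 ms response is flagged by the rolling baseline because it is far outside recent behavior, while the 1000 ms static threshold never fires. That gap is the kind of degradation users feel before a threshold alert does.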

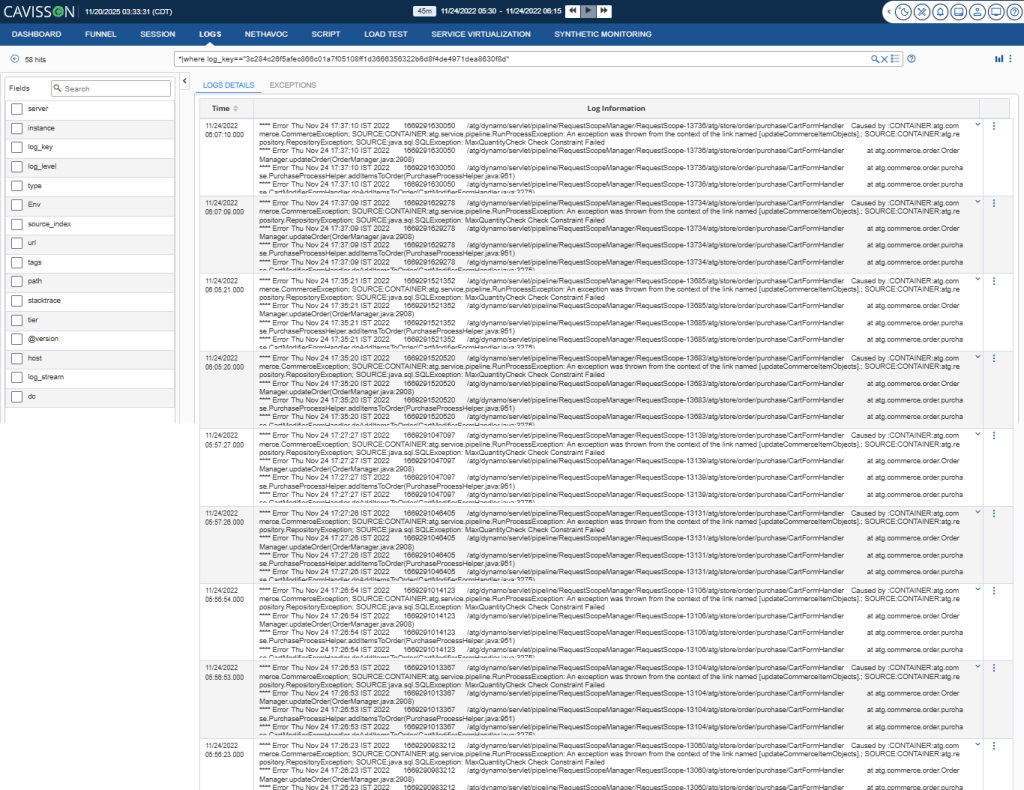

2. Log Intelligence with NetForest

Logs contain the richest operational truth in your environment—yet they’re often the most underutilized resource.

NetForest transforms log chaos into clarity by:

- Centralizing logs across distributed systems into a single source of truth

- Correlating log data with application performance metrics

- Enabling lightning-fast diagnosis during critical incidents

The result: Your team shifts from reactive firefighting to proactive problem prevention.
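As a rough illustration of what correlating logs with performance metrics can look like, the sketch below pulls warning and error logs for one service from a time window around a metric anomaly. It is an assumption-laden example, not NetForest's implementation: the `LogEvent` type, the `logs_near` helper, the 30-second window, and the sample log lines are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass(frozen=True)
class LogEvent:
    timestamp: datetime
    service: str
    level: str
    message: str


def logs_near(
    anomaly_at: datetime,
    service: str,
    logs: Iterable[LogEvent],
    window: timedelta = timedelta(seconds=30),
) -> List[LogEvent]:
    """Return WARN/ERROR logs for `service` within `window` of the anomaly, oldest first."""
    hits = [
        event
        for event in logs
        if event.service == service
        and event.level in {"WARN", "ERROR"}
        and abs(event.timestamp - anomaly_at) <= window
    ]
    return sorted(hits, key=lambda event: event.timestamp)


# Made-up data: a latency anomaly on "checkout" at 10:00:00.
t0 = datetime(2026, 1, 15, 10, 0, 0)
sample_logs = [
    LogEvent(t0 + timedelta(seconds=20), "checkout", "WARN", "retrying payment call"),
    LogEvent(t0 - timedelta(seconds=10), "checkout", "ERROR", "connection pool exhausted"),
    LogEvent(t0 - timedelta(seconds=5), "checkout", "INFO", "request received"),
    LogEvent(t0, "search", "ERROR", "index timeout"),
    LogEvent(t0 + timedelta(minutes=5), "checkout", "ERROR", "unrelated later failure"),
]

related = logs_near(t0, "checkout", sample_logs)
for event in related:
    print(event.timestamp.time(), event.level, event.message)
```

Time-window matching is the simplest possible join. Production systems typically also correlate on shared identifiers such as a request or trace ID, which avoids pulling in unrelated logs that happen to occur nearby in time.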

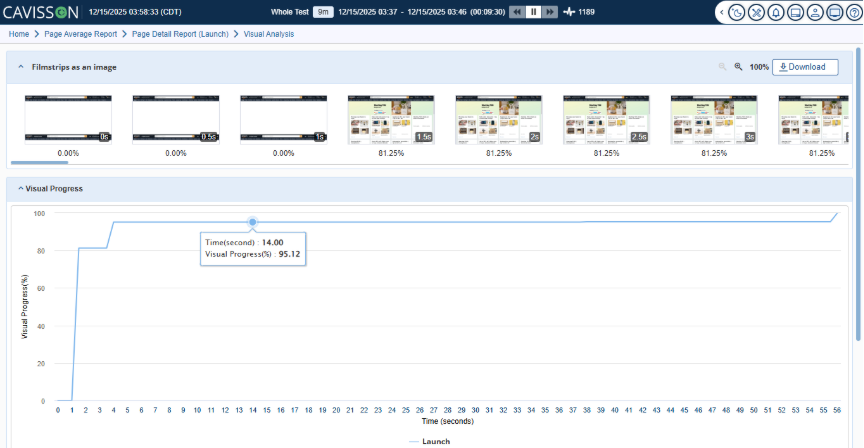

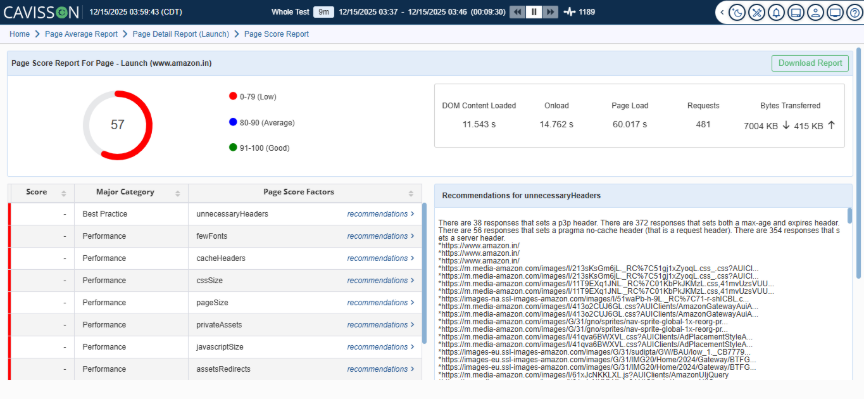

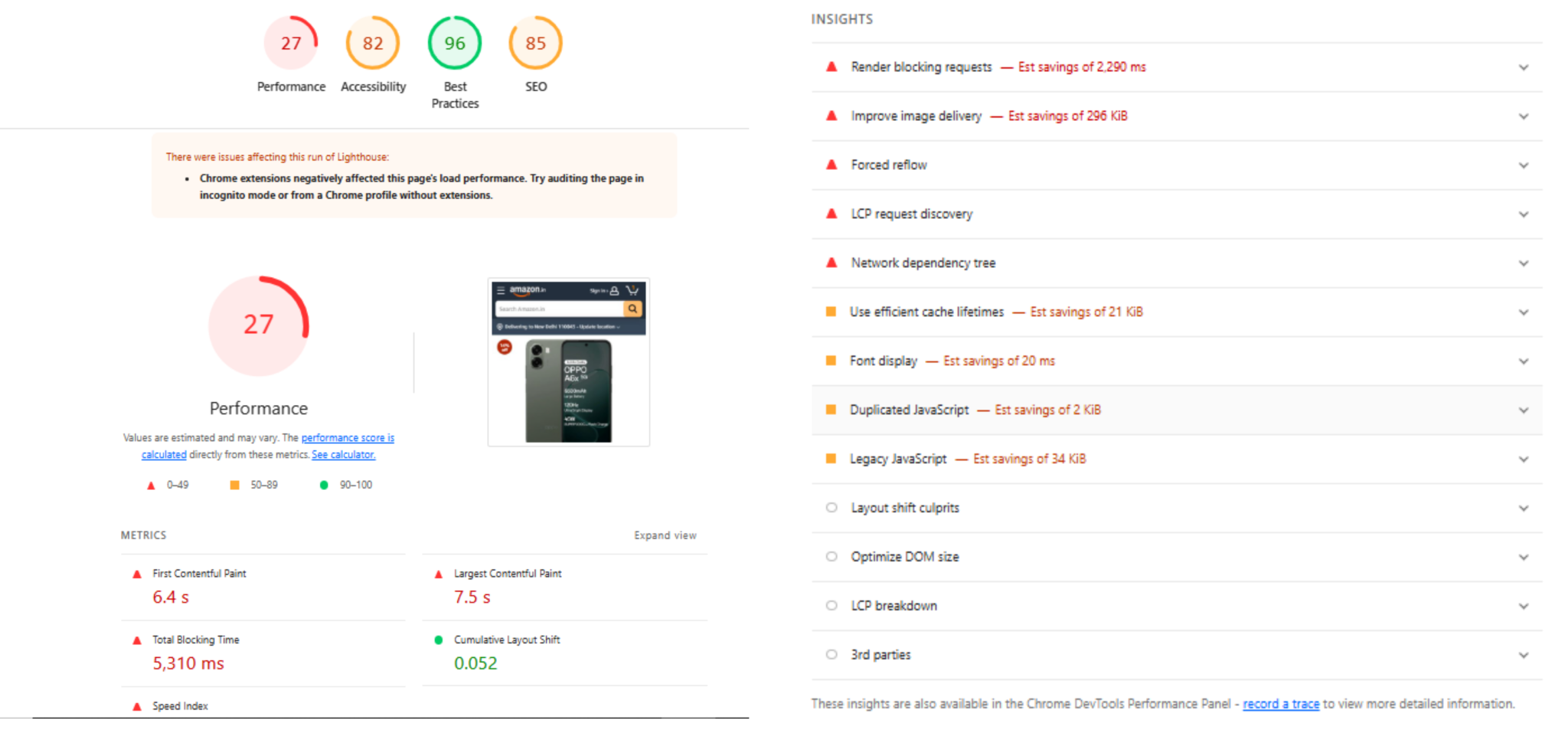

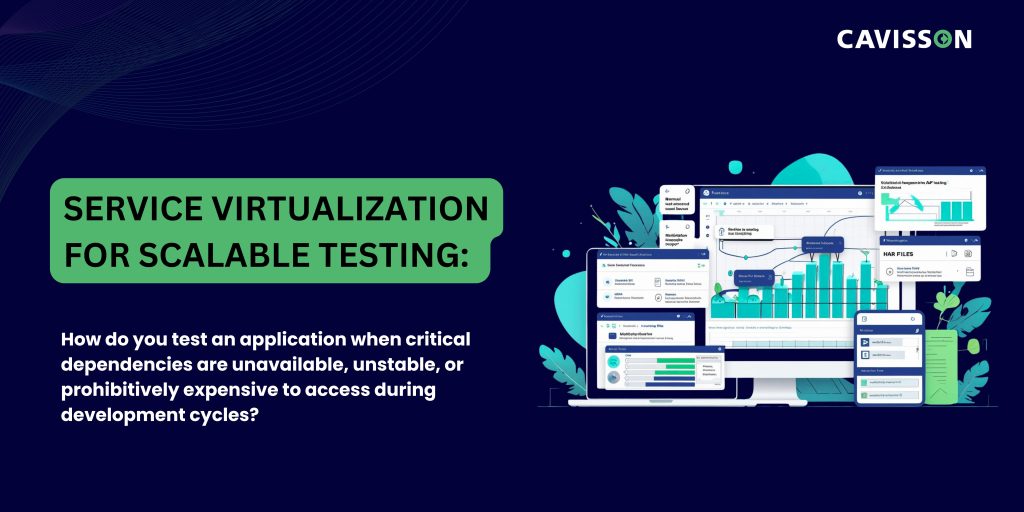

3. Experience-Driven Observability with NetVision

In 2026, user experience is the ultimate KPI. Backend metrics mean nothing if users are struggling.

NetVision bridges backend performance and real-world experience through:

- Real User Monitoring (RUM): Understand actual user behavior across geographies, devices, browsers, and networks. See session-level issues as they happen.

- Synthetic Monitoring: Proactively test critical user journeys 24/7, catching problems before customers ever encounter them.

The result: You detect and resolve experience degradation before it becomes a business crisis.
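For readers new to synthetic monitoring, the following sketch shows the basic shape of a scripted user journey: run each step in order, time it, and stop at the first failure or slow step. It is a simplified illustration rather than NetVision's mechanism. The names `run_journey` and `StepResult`, the 500 ms budget, and the stand-in step functions are assumptions; a real check would issue HTTP requests or drive a browser in place of these stubs.

```python
import time
from typing import Callable, List, NamedTuple, Tuple


class StepResult(NamedTuple):
    name: str
    ok: bool
    elapsed_ms: float


def run_journey(
    steps: List[Tuple[str, Callable[[], None]]], budget_ms: float
) -> List[StepResult]:
    """Run steps in order, timing each; stop at the first failed or over-budget step."""
    results: List[StepResult] = []
    for name, action in steps:
        start = time.perf_counter()
        try:
            action()
            succeeded = True
        except Exception:
            succeeded = False
        elapsed_ms = (time.perf_counter() - start) * 1000
        ok = succeeded and elapsed_ms <= budget_ms
        results.append(StepResult(name, ok, elapsed_ms))
        if not ok:
            break  # later steps depend on earlier ones
    return results


# Stand-in steps (hypothetical); real ones would hit the application.
def load_home_page() -> None:
    pass


def add_to_cart() -> None:
    raise RuntimeError("simulated error from cart service")


def check_out() -> None:
    pass


journey = [
    ("load home page", load_home_page),
    ("add to cart", add_to_cart),
    ("check out", check_out),
]

results = run_journey(journey, budget_ms=500.0)
for step in results:
    print(f"{step.name}: {'ok' if step.ok else 'FAILED'} ({step.elapsed_ms:.1f} ms)")
```

Run on a schedule from several locations, a check like this surfaces a broken step in a critical journey even when no real user has hit it yet.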

Monitoring vs. Observability: A Clear Comparison

| Dimension | Traditional Monitoring | Modern Observability |
| --- | --- | --- |
| Focus | Known issues and expected failures | Unknown and emerging issues |
| Data Sources | Metrics only | Metrics + Logs + User Experience Data |
| Alert Strategy | Reactive threshold violations | Predictive, context-aware intelligence |
| Visibility | Siloed by team and tool | End-to-end across the entire system |
| Business Impact | Disconnected from outcomes | Directly tied to customer experience and revenue |

Modern enterprises don’t abandon monitoring—they elevate it into observability.

Why Observability Is Mission-Critical in 2026

Observability has evolved from optional to essential, driven by:

- Technical complexity: Cloud-native architectures and microservices create intricate, dynamic environments where traditional monitoring goes blind.

- Always-on expectations: AI-driven platforms and global user bases demand 24/7 reliability with zero tolerance for degradation.

- Team collaboration: SRE, DevOps, and product teams need shared visibility to move fast without breaking things.

- Competitive differentiation: In saturated markets, superior customer experience often determines the winner.

Organizations that invest in observability can achieve faster innovation cycles, fewer production incidents, and stronger customer loyalty, and these advantages compound over time.

Final Thought: Observability as Business Strategy

Observability isn’t about deploying more tools. It’s about understanding your systems the way your customers experience them.

Here’s what sets Cavisson apart: NetDiagnostics, NetForest, and NetVision aren’t three separate products requiring three different logins, dashboards, and workflows. They’re a unified observability platform—purpose-built to work together seamlessly.

One platform. One interface. One source of truth.

When an application slows down, you don’t need to jump between tools to correlate metrics, logs, and user impact. Everything connects automatically. NetDiagnostics shows you the performance anomaly. NetForest surfaces the related log errors. NetVision reveals which users are affected and how severely.

This unified approach transforms how teams work:

- Faster root-cause analysis — no context-switching between tools

- Shared visibility across SRE, DevOps, and product teams

- Integrated workflows from detection to diagnosis to resolution

- Lower total cost of ownership — one platform instead of a patchwork of point solutions

Cavisson Systems enables enterprises to transition from reactive monitoring to intelligent observability—keeping performance, reliability, and experience aligned with business objectives. All from a single, unified platform.

Because in 2026, the question isn’t whether you monitor your systems.

It’s whether you truly understand them—completely, quickly, and confidently.

Ready to transform your observability strategy? Discover how Cavisson’s unified platform can help your enterprise move from visibility to insight to action—without the tool sprawl.