Why Your Production Systems Need Chaos Engineering

In today’s hyper-connected digital landscape, system downtime isn’t just an inconvenience—it’s a business-critical disaster. A single minute of downtime can cost enterprises thousands of dollars, erode customer trust, and damage brand reputation. The question isn’t whether your systems will fail, but how well they’ll survive when they do.

That’s where NetHavoc by Cavisson Systems comes in—a comprehensive chaos engineering platform designed to help organizations build truly resilient, fault-tolerant systems before failures impact real users.

What is NetHavoc? Understanding Chaos Engineering

NetHavoc is Cavisson Systems’ enterprise-grade chaos engineering tool that enables DevOps and SRE teams to proactively inject controlled failures into their infrastructure. By simulating real-world failure scenarios in safe, controlled environments, NetHavoc helps identify architectural weaknesses, validate disaster recovery plans, and build confidence in system reliability.

The Chaos Engineering Philosophy

Chaos engineering operates on a simple but powerful principle: deliberately break things in controlled ways to understand how systems behave under stress. This proactive approach shifts reliability testing from reactive firefighting to predictive prevention.
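The principle above is usually run as a loop: confirm the system is healthy, inject a fault, observe, remove the fault, and confirm recovery. The following is a minimal sketch of that loop in Python. It is not NetHavoc's API; `run_experiment`, `ExperimentResult`, and `FakeService` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ExperimentResult:
    steady_before: bool
    steady_during: bool
    steady_after: bool

    @property
    def hypothesis_held(self) -> bool:
        # Resilient only if healthy before, during, and after the fault.
        return self.steady_before and self.steady_during and self.steady_after


def run_experiment(
    steady_state: Callable[[], bool],
    inject: Callable[[], None],
    rollback: Callable[[], None],
) -> ExperimentResult:
    """Run one controlled experiment around a steady-state check."""
    if not steady_state():
        # Never inject faults into a system that is already unhealthy;
        # the during/after probes are not taken in this case.
        return ExperimentResult(False, False, False)
    inject()
    try:
        during = steady_state()
    finally:
        rollback()  # always remove the fault, even if the probe raises
    after = steady_state()
    return ExperimentResult(True, during, after)


class FakeService:
    """Hypothetical stand-in for a replicated service."""

    def __init__(self, replicas: int) -> None:
        self.replicas = replicas
        self.killed = 0

    def healthy(self) -> bool:
        return self.replicas - self.killed >= 1

    def kill_one(self) -> None:
        self.killed += 1

    def restore(self) -> None:
        self.killed = 0
```

With two replicas, killing one leaves the service healthy and the hypothesis holds. With a single replica the same experiment fails during injection, which is exactly the kind of weakness the experiment is meant to expose.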

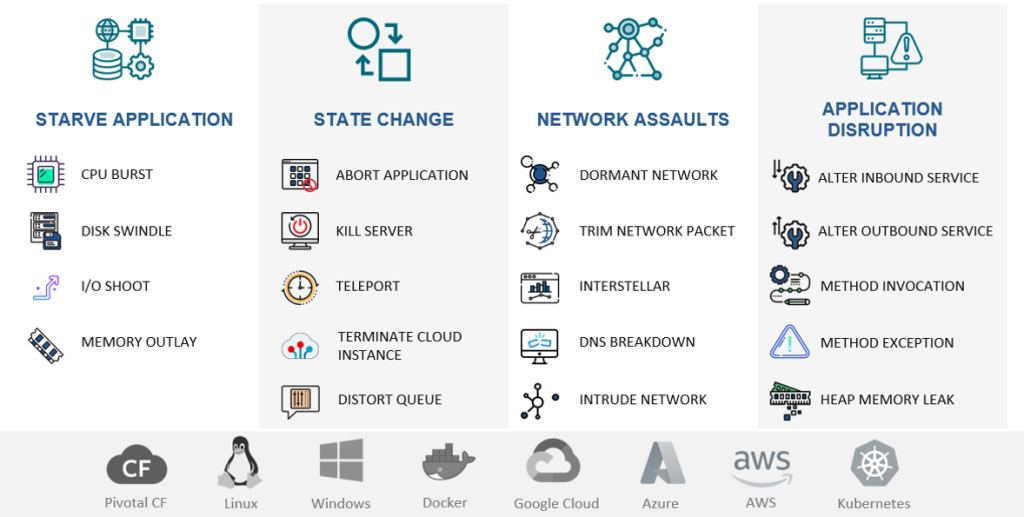

Comprehensive Multi-Platform Support

NetHavoc stands out with its extensive platform compatibility, ensuring chaos engineering practices can be implemented across your entire technology stack:

- Linux Environments: Traditional bare-metal servers and containerized workloads

- Windows Infrastructure: Enterprise applications and legacy services

- Docker Containers: Isolated application testing and microservice validation

- Kubernetes Clusters: Cloud-native orchestrated workloads and pod-level chaos

- Multi-Cloud Platforms: AWS, Azure, Google Cloud, and hybrid environments

- VMware Tanzu: Container orchestration for enterprise Kubernetes

- Messaging Services: Queue systems, event streams, and communication infrastructure

This universal compatibility means teams can implement consistent chaos engineering practices regardless of where applications run, eliminating blind spots in resilience testing.

Four Pillars of Chaos: NetHavoc’s Experiment Categories

1. Starve Application

Test application resilience by simulating service disruptions including:

- Sudden service crashes and unexpected terminations

- Graceful and ungraceful restarts

- Service unavailability and timeout scenarios

- Dependency service failures

Why It Matters: Application crashes are inevitable. NetHavoc helps ensure your orchestration platform detects failures quickly, restarts services automatically, and maintains service availability through redundancy.

2. State Changes

Validate system behavior during dynamic conditions:

- Configuration changes and rollbacks

- State transitions and environmental modifications

- Feature flag toggles and canary deployments

- Database schema migrations

Why It Matters: Modern systems constantly evolve. Testing state changes ensures deployments don’t introduce instability and that rollback procedures work when needed.

3. Network Assaults

Inject network-related failures, a frequent cause of production incidents:

- Latency injection (simulating slow networks)

- Packet loss and corruption

- Bandwidth throttling and restrictions

- DNS failures and connectivity issues

- Network partitioning (split-brain scenarios)

Why It Matters: Distributed systems live and die by network reliability. NetHavoc’s network chaos experiments validate that timeout configurations, retry policies, and circuit breakers function correctly.
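To make the circuit-breaker idea concrete, here is a minimal single-threaded sketch of the pattern that a latency or packet-loss experiment would exercise. It is an illustration only, not part of NetHavoc; `CircuitBreaker`, `CircuitOpenError`, and the `failure_threshold` and `reset_after` parameters are assumed names.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the breaker is open."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock  # injectable so tests can control time
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: let one trial call through (half-open).
        try:
            result = fn()
        except Exception:
            self._failures += 1
            half_open = self._opened_at is not None
            if half_open or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open, or re-open, the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A network chaos experiment can then assert that, once injected timeouts exceed the threshold, callers fail fast instead of piling up on a slow dependency, and that traffic resumes after the cool-down.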

4. Application Disruptions

Test application-level resilience:

- Third-party API failures and slowdowns

- Database connection issues

- Cache failures and invalidation

- Integration point breakdowns

Why It Matters: Applications rarely fail in isolation. NetHavoc ensures your systems gracefully degrade when dependencies experience issues.
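Graceful degradation usually means serving a reduced answer rather than an error when a dependency is down. The sketch below shows one common form, falling back to the last cached value. The function name `get_recommendations` and its parameters are hypothetical and chosen only for this example.

```python
from typing import Callable, Dict, List, Sequence


def get_recommendations(
    user_id: str,
    fetch_live: Callable[[str], Sequence[str]],
    cache: Dict[str, List[str]],
    default: Sequence[str] = (),
) -> List[str]:
    """Return live results, or the last cached ones if the dependency fails."""
    try:
        items = list(fetch_live(user_id))
    except (ConnectionError, TimeoutError):
        # Dependency unavailable: degrade to stale data, or to a safe
        # default when nothing has been cached for this user yet.
        return list(cache.get(user_id, default))
    cache[user_id] = items  # refresh the fallback copy on every success
    return items
```

An application disruption experiment would replace `fetch_live` with a failing call and check that users still receive a response.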

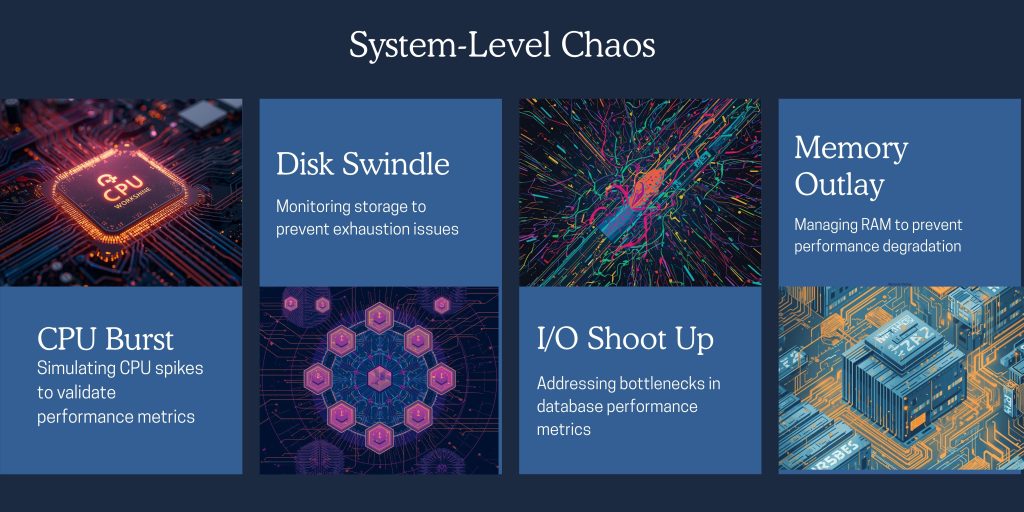

Precision Chaos: NetHavoc’s Havoc Types

➣ CPU Burst: Performance Under Pressure

Simulate sudden CPU consumption spikes to validate:

- Auto-scaling policies and thresholds

- Resource limit configurations

- Application performance degradation patterns

- Priority-based workload scheduling

Use Case: E-commerce platforms can test whether checkout services maintain performance when recommendation engines consume excessive CPU during traffic spikes.
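As a rough illustration of how a CPU spike can be generated at a chosen intensity, the sketch below alternates busy-spinning and sleeping within short periods. It loads a single core only and is not how NetHavoc implements CPU Burst; `burn_cpu` and its parameters are assumptions for this example.

```python
import time


def burn_cpu(intensity: float, duration_s: float, period_s: float = 0.1) -> float:
    """Keep one core busy for `intensity` (0.0 to 1.0) of each period.

    Returns the total number of seconds spent busy-spinning. The final
    period may run slightly past `duration_s`.
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0.0 and 1.0")
    busy_total = 0.0
    deadline = time.monotonic() + duration_s
    while time.monotonic() < deadline:
        start = time.monotonic()
        busy_until = start + period_s * intensity
        while time.monotonic() < busy_until:
            pass  # spin to consume CPU
        busy_total += time.monotonic() - start
        time.sleep(period_s * (1.0 - intensity))  # idle for the rest of the period
    return busy_total
```

Running one such loop per core (for example, in separate processes) approximates a machine-wide spike, which is when auto-scaling thresholds and resource limits become observable.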

➣ Disk Swindle: Storage Exhaustion Testing

Fill disk space to verify:

- Monitoring alert triggers and escalation

- Log rotation and cleanup policies

- Application behavior at storage capacity

- Disk quota enforcement

Use Case: Prevent the common “disk full” production disaster by ensuring applications handle storage exhaustion gracefully and monitoring alerts fire before critical thresholds.
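The monitoring side of this test can be as simple as classifying disk usage against warning and critical thresholds. The sketch below uses Python's standard `shutil.disk_usage`; the threshold values and the names `disk_alert_level` and `check_path` are illustrative assumptions, not NetHavoc settings.

```python
import shutil


def disk_alert_level(
    used_fraction: float, warn_at: float = 0.80, critical_at: float = 0.90
) -> str:
    """Classify a disk usage fraction as 'ok', 'warning', or 'critical'."""
    if used_fraction >= critical_at:
        return "critical"
    if used_fraction >= warn_at:
        return "warning"
    return "ok"


def check_path(path: str = "/") -> str:
    """Return the alert level for the filesystem that contains `path`."""
    usage = shutil.disk_usage(path)
    if usage.total == 0:
        return "ok"  # nothing to measure on an empty or virtual filesystem
    return disk_alert_level(usage.used / usage.total)
```

A disk-fill experiment should push usage past `warn_at` and confirm the warning fires well before the critical level is reached.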

➣ I/O Shoot Up: Disk Performance Bottlenecks

Increase disk I/O to identify:

- I/O bottlenecks affecting application performance

- Database query performance under stress

- Logging system impact on applications

- Storage system scalability limits

Use Case: Database-heavy applications can validate that slow disk I/O doesn’t cascade into application-wide slowdowns.

➣ Memory Outlay: RAM Utilization Stress

Increase memory consumption to test:

- Memory management and garbage collection efficiency

- Out of Memory (OOM) killer behavior

- Application memory leak detection

- Container memory limit handling

Use Case: Ensure Kubernetes automatically restarts memory-leaking containers before they affect other workloads on the same node.

Advanced Configuration Capabilities

➣ Flexible Timing Control

Injection Timing: Start chaos immediately or schedule with custom delays.

Experiment Duration: Set precise timeframes (hours:minutes:seconds) for controlled testing.

Ramp-Up Patterns: Gradually increase chaos intensity to simulate realistic failure progressions.
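The timing settings above can be sketched in a few lines: parse an `hours:minutes:seconds` duration and compute a linearly ramped intensity. Both helper names are hypothetical, and a linear ramp is only one possible shape; the source does not say which ramp patterns NetHavoc supports.

```python
def parse_duration(text: str) -> int:
    """Convert 'hours:minutes:seconds' into a number of seconds."""
    hours, minutes, seconds = (int(part) for part in text.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def ramp_intensity(elapsed_s: float, ramp_s: float, target: float) -> float:
    """Linearly scale intensity from 0 up to `target` over `ramp_s` seconds."""
    if ramp_s <= 0:
        return target  # no ramp configured: apply full intensity at once
    fraction = min(max(elapsed_s / ramp_s, 0.0), 1.0)
    return target * fraction
```

For example, with a 60-second ramp toward 80% CPU, the injected load is 40% at the 30-second mark and stays at 80% once the ramp completes.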

➣ Sophisticated Targeting

Tier-Based Selection: Target specific application tiers (web, application, database).

Server Selection Modes: Choose specific servers or dynamic selection based on labels.

Percentage-Based Targeting: Affect only a subset of the infrastructure for gradual validation.

Tag-Based Filtering: Use metadata tags for precise experiment scoping.
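Combining tier, tag, and percentage targeting amounts to filtering an inventory and then sampling from what remains. The following sketch shows the idea under assumed names (`Server`, `select_targets`); it does not reflect NetHavoc's actual data model.

```python
import math
import random
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Sequence


@dataclass(frozen=True)
class Server:
    name: str
    tier: str
    tags: FrozenSet[str] = frozenset()


def select_targets(
    servers: Sequence[Server],
    tier: Optional[str] = None,
    required_tags: FrozenSet[str] = frozenset(),
    percentage: float = 100.0,
    seed: Optional[int] = None,
) -> List[Server]:
    """Filter by tier and tags, then sample a percentage of the matches."""
    candidates = [
        s
        for s in servers
        if (tier is None or s.tier == tier) and required_tags <= s.tags
    ]
    if not candidates or percentage <= 0:
        return []
    # Round up so a small percentage still selects at least one server.
    count = min(len(candidates), math.ceil(len(candidates) * percentage / 100))
    return random.Random(seed).sample(candidates, count)
```

Passing a fixed `seed` makes the selection repeatable, which helps when an experiment needs to be rerun against the same subset.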

➣ Granular Havoc Parameters

CPU Attack Configuration:

- CPU utilization percentage targets

- CPU burn intensity levels (0-100%)

- Specific core targeting for NUMA-aware testing

Resource Limits:

- Memory consumption thresholds

- Disk space consumption limits

- Network bandwidth restrictions

➣ Organization and Governance

Project Hierarchy: Organize experiments by team, service, application, or environment.

Scenario Management: Create reusable chaos templates for common failure patterns.

Access Controls: Role-based permissions for experiment execution and scheduling.

Audit Trails: Comprehensive logging of who ran which experiment.

➣ Notifications and Alerting

Configure multi-channel notifications:

- Email alerts for experiment start and completion

- Slack/Teams integrations for team collaboration

- Webhook support for custom integrations

- PagerDuty integration for on-call awareness

➣ Intelligent Scheduling

Recurring Experiments: Schedule daily, weekly, or monthly chaos testing.

Business Hours Awareness: Run experiments during specified time windows.

CI/CD Integration: Trigger chaos tests as part of deployment pipelines.

Automated Game Days: Schedule comprehensive resilience exercises.
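A time-window guard is the core of business-hours awareness: before starting, the scheduler checks that the current time falls inside an allowed window. The sketch below is a simplified assumption of how such a check might look; `in_window` is a hypothetical helper and does not handle windows that cross midnight.

```python
from datetime import datetime, time
from typing import FrozenSet


def in_window(
    now: datetime,
    start: time,
    end: time,
    weekdays: FrozenSet[int] = frozenset(range(5)),  # Monday=0 .. Friday=4
) -> bool:
    """Return True if `now` is on an allowed weekday and within [start, end)."""
    if now.weekday() not in weekdays:
        return False
    return start <= now.time() < end
```

A CI/CD pipeline step could call a check like this and skip the chaos stage when a deployment happens outside the agreed window.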

Real-World Case Study: The CrowdStrike Outage of July 2024

The Largest IT Outage in History – And Why Chaos Engineering Matters

On July 19, 2024, the world witnessed what has been described as the largest IT outage in history. A faulty software update from cybersecurity firm CrowdStrike affected approximately 8.5 million Windows devices worldwide, causing catastrophic disruptions across multiple critical sectors.

The Devastating Impact

The financial toll was staggering. Fortune 500 companies alone suffered more than $5.4 billion in direct losses, with only 10-20% covered by cybersecurity insurance policies.

Industry-Specific Damage:

- Healthcare sector: $1.94 billion in losses

- Banking sector: $1.15 billion in losses

- Airlines: $860 million in collective losses

- Delta Air Lines alone: $500 million in damages

The outage had far-reaching consequences beyond financial losses. Thousands of flights were grounded, surgeries were canceled, users couldn’t access online banking, and even 911 emergency operators couldn’t respond properly.

What Went Wrong: A Technical Analysis

CrowdStrike routinely tests software updates before releasing them to customers, but on July 19, a bug in their cloud-based validation system allowed problematic software to be pushed out despite containing flawed content data.

The faulty update was published just after midnight Eastern time (04:09 UTC) and reverted about 78 minutes later, at 1:27 AM (05:27 UTC), but millions of computers had already automatically downloaded it. The issue only affected Windows devices that were powered on and able to receive updates during those early morning hours.

When Windows devices tried to access the flawed file, it caused an “out-of-bounds memory read” that couldn’t be gracefully handled, resulting in Windows operating system crashes—the infamous Blue Screen of Death that required manual intervention on each affected machine.

The Single Point of Failure Problem

This incident perfectly illustrates what chaos engineering aims to prevent. As Fitch Ratings noted, this incident highlights a growing risk of single points of failure, which are likely to increase as companies seek consolidation and fewer vendors gain higher market shares.

How NetHavoc Could Have Prevented This Disaster

If CrowdStrike had implemented comprehensive chaos engineering practices with NetHavoc, several critical safeguards could have been in place:

- State Change Validation: NetHavoc’s State Change chaos experiments would have tested software update deployments in controlled environments, revealing how systems respond to configuration changes before production rollout.

- Staggered Rollout Testing: Using NetHavoc’s scheduling and targeting capabilities, CrowdStrike could have simulated phased update deployments, discovering the validation system bug when it affected only a small percentage of test systems rather than 8.5 million production devices.

- Graceful Degradation Validation: NetHavoc’s Application Disruption experiments would have tested whether systems could continue operating when security agent updates fail, potentially implementing fallback mechanisms that prevent complete system crashes.

- Blast Radius Limitation: NetHavoc’s granular targeting features enable testing update procedures on specific server groups first, exactly the approach CrowdStrike later committed to implementing after the incident.

- Automated Rollback Testing: Chaos experiments could have validated automatic rollback procedures when updates cause system instability, ensuring recovery mechanisms work before production deployment.
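The staggered rollout and automated rollback ideas in the list above can be sketched as a single loop: update a small batch, check health, and either widen the rollout or undo everything. This is a generic illustration, not CrowdStrike's or NetHavoc's process; `staged_rollout` and its callback parameters are hypothetical.

```python
from typing import Callable, List, Sequence


def staged_rollout(
    hosts: Sequence[str],
    stage_fractions: Sequence[float],
    apply_update: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> List[str]:
    """Update hosts in widening stages; roll everything back on failure.

    Returns the hosts left updated, or an empty list after a rollback.
    """
    updated: List[str] = []
    for fraction in stage_fractions:
        # Cumulative number of hosts that should be updated after this stage.
        target_count = max(1, round(len(hosts) * fraction))
        for host in hosts[len(updated):target_count]:
            apply_update(host)
            updated.append(host)
        if not all(is_healthy(h) for h in updated):
            # Limit the blast radius: undo in reverse order and stop.
            for host in reversed(updated):
                rollback(host)
            return []
    return updated
```

With stages of 1%, 10%, and 100% across 100 hosts, a bad update touches one host before the health check fails and the rollout halts, rather than reaching the whole fleet.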

Conclusion: Embrace Chaos, Build Confidence

In the complex landscape of distributed systems in 2025, system reliability directly determines business success. Users expect perfect uptime, competitors exploit your downtime, and outages cost more than ever before.

NetHavoc by Cavisson Systems provides the comprehensive chaos engineering platform needed to build truly resilient systems. By proactively discovering vulnerabilities, validating assumptions, and continuously testing resilience, NetHavoc transforms uncertainty into confidence.

When failures occur—and they will—your systems will respond gracefully, your teams will react swiftly, and your users will remain unaffected. That’s not luck; it’s chaos engineering with NetHavoc.